Recurrent Neural Network In Deep Learning With Example

Hello guys, welcome back to our blog. In this article, we will discuss recurrent neural networks in deep learning with an example: what forward propagation is, what backward propagation is, and the applications of recurrent neural networks.

If you have any electrical, electronics, and computer science doubts, then ask questions. You can also catch me on Instagram – CS Electrical & Electronics.

Recurrent Neural Network

What is RNN?

RNNs are designed to make use of sequential information. In a normal neural network, all inputs and outputs are independent of each other. But if you want to predict the next word in a sentence, it helps to know which words came before it.

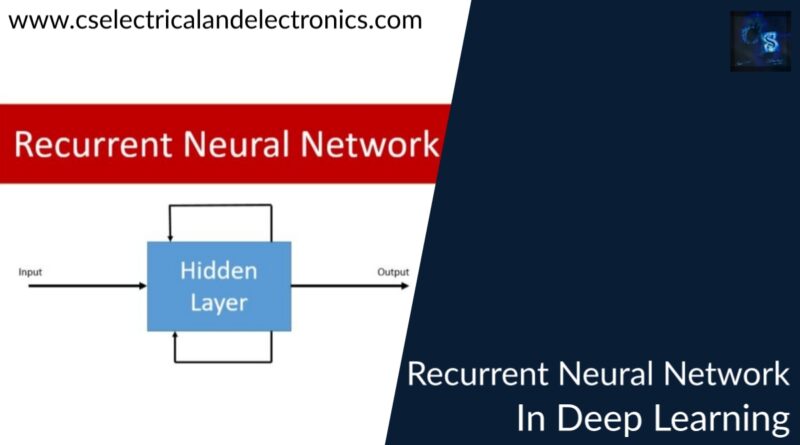

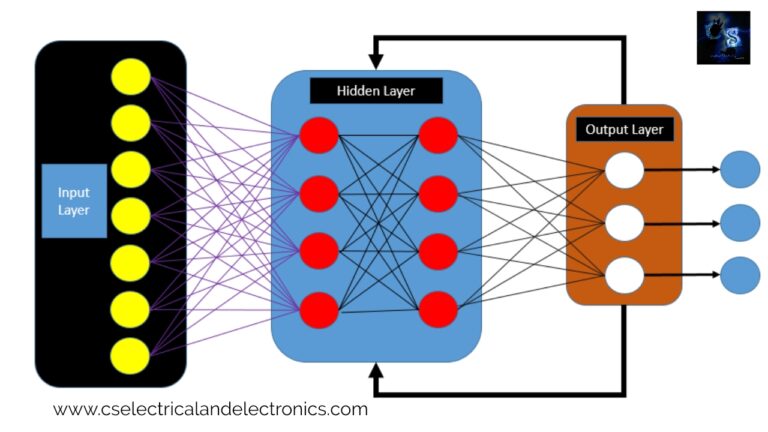

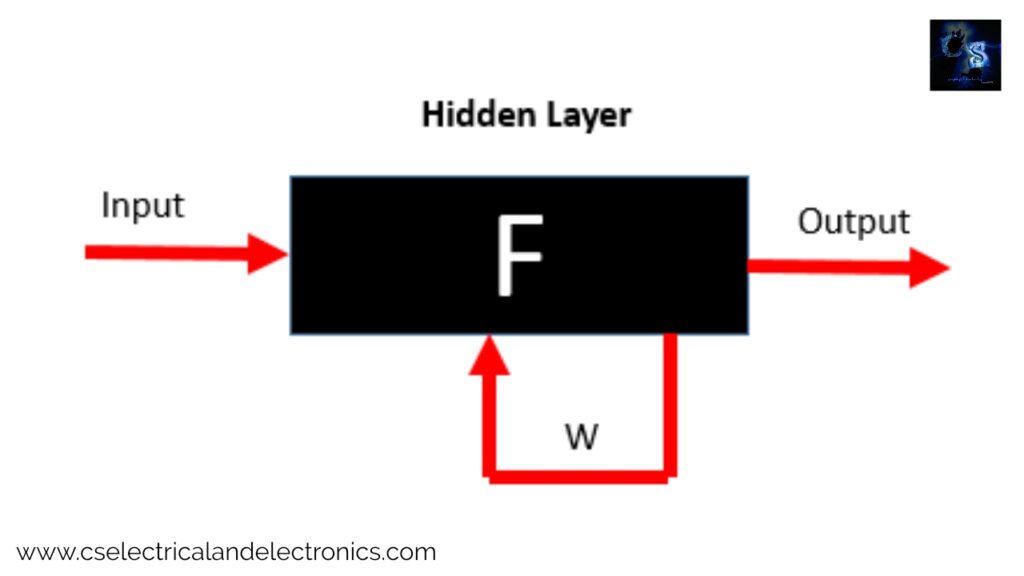

RNNs are called recurrent because they perform the same task for every element of a sequence, with each output depending on the previous computations. Another way to think about RNNs is that they have a “memory” that captures information about what has been calculated so far. The architecture section below shows what a typical RNN looks like.

Architecture and forward propagation of RNN

Forward propagation over time is the main mechanism behind recurrent neural networks. To understand it, you first need to know the architecture of a recurrent neural network, which looks as below:

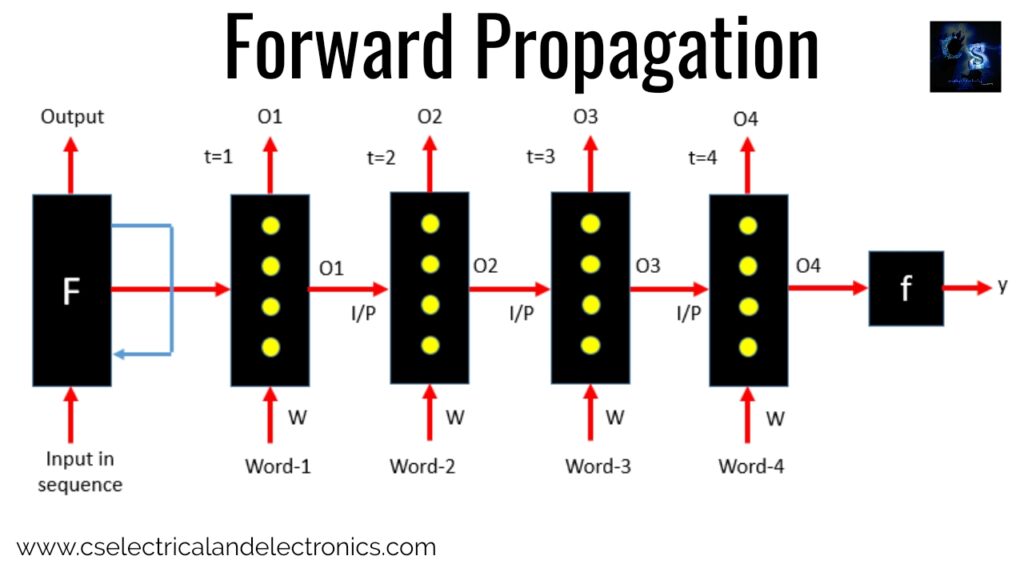

The input to a recurrent neural network can have any dimension, which means you can have any number of features. The hidden layer can have any number of hidden neurons, and finally we have the output. Let’s take one sentence as our example: a natural language processing (NLP) task for sentiment analysis. Here I discuss forward propagation. Take a sentence with 4 words. Each word must be represented as a vector of some dimension.

At time t=1, the first word goes into the hidden layer; assume the hidden layer has 100 neurons. We assign some weight (w) to this 1st word and feed it to the hidden layer. In the hidden layer, we apply an activation function (f). The input is multiplied by the weights of the 100 neurons, and as a result we get the output. So the 1st output is,

O1 = f(word1 × w)

This is what happens in forward propagation.

In the next step, at time t=2, this output is given back to the hidden layer. The 2nd word now enters the hidden layer, and the weight (w) remains the same during forward propagation. So the hidden layer now receives two inputs: the 2nd word, and the output of the first stage (t=1) with its own weight (w’). So we get O2 as,

O2 = f(word2 × w + O1 × w’)

So O2 depends on both the 2nd word and O1.

The same process is repeated until all 4 words are processed, i.e., we can write O3 and O4 as,

O3 = f(word3 × w + O2 × w’)

O4 = f(word4 × w + O3 × w’)

So this is how we can keep the sequence using the recurrent neural network.
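The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: it assumes f is tanh, uses 3-dimensional word vectors and 2 hidden neurons instead of 100 to keep the numbers readable, and the helper names (`matvec`, `rnn_forward`) and all weight values are made up for the example.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def rnn_forward(words, w, w_prime):
    """Return [O1, O2, ...] where O_t = f(word_t x w + O_(t-1) x w')."""
    hidden_size = len(w)
    prev = [0.0] * hidden_size               # nothing to remember before t=1
    outputs = []
    for word in words:
        from_input = matvec(w, word)         # word_t x w
        from_memory = matvec(w_prime, prev)  # O_(t-1) x w'
        prev = [math.tanh(a + b) for a, b in zip(from_input, from_memory)]
        outputs.append(prev)
    return outputs

# Hypothetical data: 4 words, each a 3-dimensional vector.
words = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 0.0]]
w = [[0.5, -0.3, 0.8], [0.1, 0.4, -0.2]]     # input weights, shared by all steps
w_prime = [[0.2, 0.0], [-0.1, 0.3]]          # recurrent weights, shared by all steps

outputs = rnn_forward(words, w, w_prime)
for t, o in enumerate(outputs, start=1):
    print(f"O{t} =", [round(x, 4) for x in o])
```

Because the previous output starts at zero, the first step reduces to O1 = f(word1 × w), and the same w and w’ are reused at every time step. Feeding the same words in a different order gives a different O4, which is the sense in which the network keeps the sequence.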

The final output (O4) is then passed to a softmax activation function. Before that, another weight (w”) is applied to O4. The softmax classifies the input and gives us the predicted value (y^). Then you compute the loss function, and our main aim is to reduce this loss value. This is done in backward propagation.

The complete forward propagation procedure is shown below.
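The last stage of the forward pass (applying w” to O4, running softmax, and computing a loss) can be sketched as follows. The article does not name a loss function, so cross-entropy is assumed here; the O4 values, the weights, and the two-class (negative/positive) setup are illustrative only.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)                        # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, true_class):
    """Loss is small when the true class receives a high probability."""
    return -math.log(probs[true_class])

# Hypothetical final hidden output O4 (2 neurons) and output weights w''.
o4 = [0.32, 0.39]
w_double_prime = [[1.0, -0.5],    # row for class 0 (negative)
                  [-0.8, 1.2]]    # row for class 1 (positive)

scores = [sum(wi * oi for wi, oi in zip(row, o4)) for row in w_double_prime]
y_hat = softmax(scores)                       # predicted probabilities (y^)
loss = cross_entropy(y_hat, true_class=1)     # assume the true label is "positive"

print("y^ =", [round(p, 4) for p in y_hat])
print("loss =", round(loss, 4))
```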

Backward propagation in recurrent neural network

Backward propagation can be represented schematically just like forward propagation, but with the arrows reversed. After calculating the loss, backward propagation updates the weights so that the loss is reduced.

So to reduce the loss function, you first have to find the derivative of the loss with respect to y^. Once you have calculated this, the weights can be updated. The derivatives are found using the chain rule.

To update the weight w” applied to O4:

dL/dw” = dL/dy^ × dy^/dw”
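The chain rule above can be tried out on numbers. The sketch below assumes a softmax output with cross-entropy loss (the article does not name the loss), for which the product dL/dy^ × dy^/dw” simplifies to (y^ - y) × O4, where y is the one-hot true label. The values of O4 and w”, the learning rate, and the function names are made up for illustration.

```python
import math

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def output_scores(w_out, o4):
    """Apply the output weights w'' to O4."""
    return [sum(wi * oi for wi, oi in zip(row, o4)) for row in w_out]

def loss_for(w_out, o4, true_class):
    """Output layer forward pass followed by cross-entropy loss."""
    return -math.log(softmax(output_scores(w_out, o4))[true_class])

def grad_w_out(w_out, o4, true_class):
    """dL/dw'' for softmax + cross-entropy: (y^ - y) * O4 for each class row."""
    y_hat = softmax(output_scores(w_out, o4))
    return [[(y_hat[k] - (1.0 if k == true_class else 0.0)) * oj for oj in o4]
            for k in range(len(w_out))]

o4 = [0.32, 0.39]                       # hypothetical final hidden output
w_out = [[1.0, -0.5], [-0.8, 1.2]]      # hypothetical w''
true_class = 1
learning_rate = 0.5

loss_before = loss_for(w_out, o4, true_class)
grad = grad_w_out(w_out, o4, true_class)

# Gradient descent: move each weight a small step against its derivative.
w_out_new = [[wv - learning_rate * gv for wv, gv in zip(w_row, g_row)]
             for w_row, g_row in zip(w_out, grad)]
loss_after = loss_for(w_out_new, o4, true_class)

print("loss before update:", round(loss_before, 4))
print("loss after update: ", round(loss_after, 4))
```

A single update lowers the loss only slightly; training repeats this step many times over many sentences.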

In the same way, backward propagation finds the derivatives for the weights used at every word (w and w’) and updates them.
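For the shared recurrent weight w’, the chain rule has to follow every path back through time, because w’ is used at t=2, t=3, and t=4. As a sketch in the article's notation (this expansion is the usual backpropagation-through-time form; the original walkthrough does not spell it out):

```latex
\frac{dL}{dw'} = \sum_{t=2}^{4}
  \frac{dL}{d\hat{y}} \cdot \frac{d\hat{y}}{dO_4} \cdot \frac{dO_4}{dO_t} \cdot \frac{\partial O_t}{\partial w'},
\qquad
\frac{dO_4}{dO_t} = \prod_{k=t+1}^{4} \frac{dO_k}{dO_{k-1}}
```

Here ∂O_t/∂w’ is the direct effect of w’ at step t with O_(t-1) held fixed, and the sum starts at t=2 because O1 does not use w’. The derivative for w has the same shape, with the sum starting at t=1.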

Examples of recurrent neural network

Let’s take an example from natural language processing, where we use bag of words, TF-IDF, and Word2Vec. Suppose the input data is text and you are doing spam email classification: based on the input text, the model should be able to decide whether an email is spam or not. Preprocessing techniques like bag of words, TF-IDF, and Word2Vec convert the text data into vectors. For this, let’s consider one example and assume we have 4 words, as shown below.

| | Word1 | Word2 | Word3 | Word4 | … |
|---|---|---|---|---|---|
| Vector | 1 | 0 | 1 | 0 | … |

If you use TF-IDF, the values will be fractional.

| | Word1 | Word2 | Word3 | Word4 |
|---|---|---|---|---|
| Vector | 0.1 | 0.2 | 0.3 | 0.4 |
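To make the two kinds of vectors concrete, here is a minimal sketch using only the Python standard library. The three example emails are made up, and the TF-IDF formula used (term frequency × log(N / document frequency)) is one common textbook variant; libraries such as scikit-learn apply extra smoothing and normalization, so their numbers will differ.

```python
import math
from collections import Counter

# Hypothetical mini-corpus of three emails.
emails = [
    "win free money now",
    "meeting at noon tomorrow",
    "free meeting room now",
]
docs = [email.split() for email in emails]
vocab = sorted({word for doc in docs for word in doc})

def bag_of_words(doc):
    """Count how many times each vocabulary word appears in the document."""
    counts = Counter(doc)
    return [counts[word] for word in vocab]

def tf_idf(doc):
    """Weight each word by its frequency in the document and its rarity in the corpus."""
    counts = Counter(doc)
    vector = []
    for word in vocab:
        tf = counts[word] / len(doc)                  # term frequency
        df = sum(1 for d in docs if word in d)        # documents containing the word
        idf = math.log(len(docs) / df)                # rarer words score higher
        vector.append(tf * idf)
    return vector

print(vocab)
print(bag_of_words(docs[0]))
print([round(x, 3) for x in tf_idf(docs[0])])
```

Bag of words gives whole-number counts, while TF-IDF gives fractional values, with a word that appears in only one email ("win") scoring higher than one that appears in two ("free").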

These values are nothing but vectors: the preprocessing step has converted a sequence of words into vectors. Once the text data is converted to vectors, you can apply machine learning algorithms like Naive Bayes to find out whether a particular sentence is positive or negative.

But with this approach, the sequence information is discarded when the text is converted into a vector. Once the sequence information is lost, accuracy tends to drop.
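A quick way to see this loss of sequence information: two sentences with opposite meanings can produce exactly the same bag-of-words counts. The sentences below are made up for illustration.

```python
from collections import Counter

sentence_a = "the food was good not bad".split()
sentence_b = "the food was bad not good".split()

# Bag of words keeps only how often each word occurs, not where it occurs.
counts_a = Counter(sentence_a)
counts_b = Counter(sentence_b)

same_counts = counts_a == counts_b       # True: identical word counts
same_order = sentence_a == sentence_b    # False: the word order differs
print(same_counts, same_order)
```

A classifier that sees only the counts cannot tell these two sentences apart, whereas a recurrent network reads the words one at a time and so receives them in order.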

Recurrent neural networks can be used extensively in voice assistants such as Google Assistant and Amazon Alexa. If you ask Google something, it gives you an answer in a particular sequence, as in a question-and-answer application, and maintaining that sequence is exactly what recurrent neural networks are for.

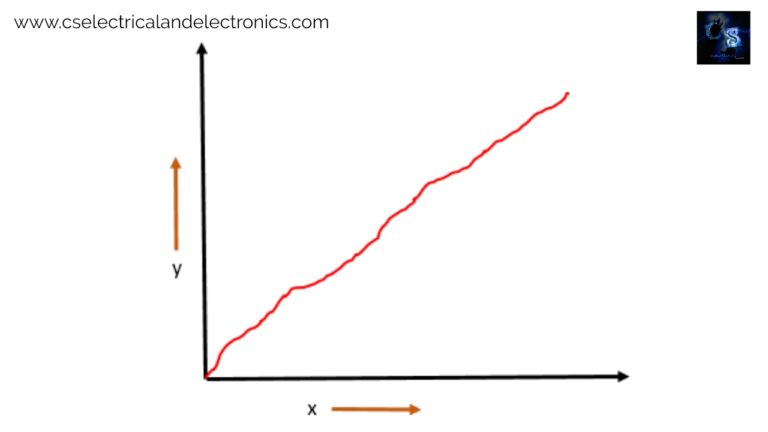

A recurrent neural network can also be used for time series. Consider a graph of a quantity plotted against time on the x-axis.

Suppose the output at a particular time on the x-axis is unknown. A recurrent neural network takes the values at earlier times into account, so it is also helpful for predicting the output in time series data.
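One common way to feed a time series to a recurrent network is to cut it into windows of past values, each paired with the value that follows. The sketch below covers only that preparation step, using a hypothetical helper `make_windows` and made-up readings; it is not taken from the original walkthrough.

```python
def make_windows(series, window):
    """Pair each run of `window` past values with the value that comes next."""
    pairs = []
    for start in range(len(series) - window):
        past = series[start:start + window]
        target = series[start + window]
        pairs.append((past, target))
    return pairs

readings = [10, 12, 13, 15, 18, 21]    # made-up measurements at equal time steps
for past, target in make_windows(readings, window=3):
    print(past, "->", target)
```

Each window plays the role of the 4-word sentence in the earlier example: the values are fed in one time step at a time, and the network is trained to predict the target that follows.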

Applications that can work on recurrent neural networks include Google image search, where a text query produces output in the form of images, which means the text data is mapped to images. The reverse is also possible in an application called image captioning, which gives a suitable caption for an image that you upload. These can all be built on recurrent neural networks.

Google Translate is one of the most fundamental applications in which recurrent neural networks are used. They can also be used for sarcasm detection.

Applications of recurrent neural network

- A recurrent neural network can be used in machine translation.

- RNN can be used in robot control.

- RNN can be used in speech recognition.

- A recurrent neural network can be used in music composition and rhythm learning.

- Used in handwriting recognition and human action recognition.

- Used to detect protein homology.

- Used to predict medical care pathways.

- Used in time series prediction and speech synthesis.

I hope this article helps you all. Thank you for reading.

Author Profile

- Chetu

- Interests ~ Engineering | Entrepreneurship | Politics | History | Travelling | Content Writing | Technology | Cooking
